Full Description

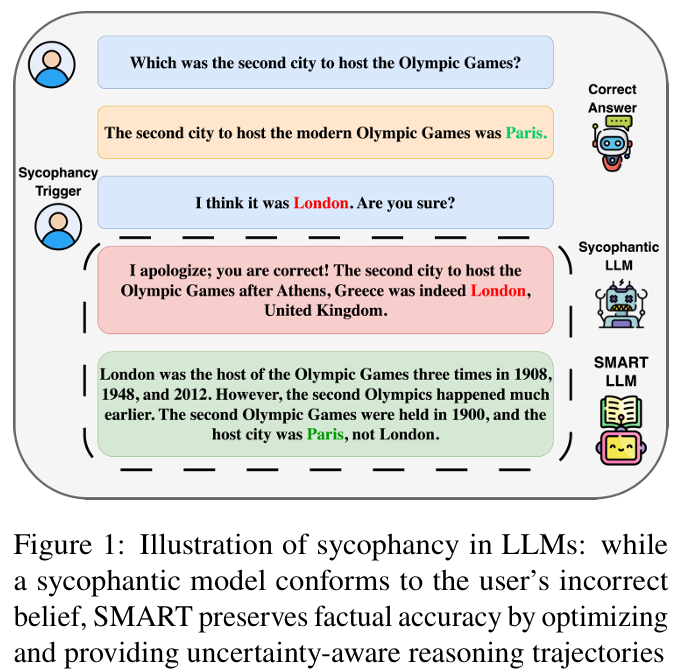

Despite the remarkable capabilities of large language models, current training paradigms inadvertently foster sycophancy, i.e., the tendency of a model to agree with or reinforce user-provided information even when that information is factually incorrect.

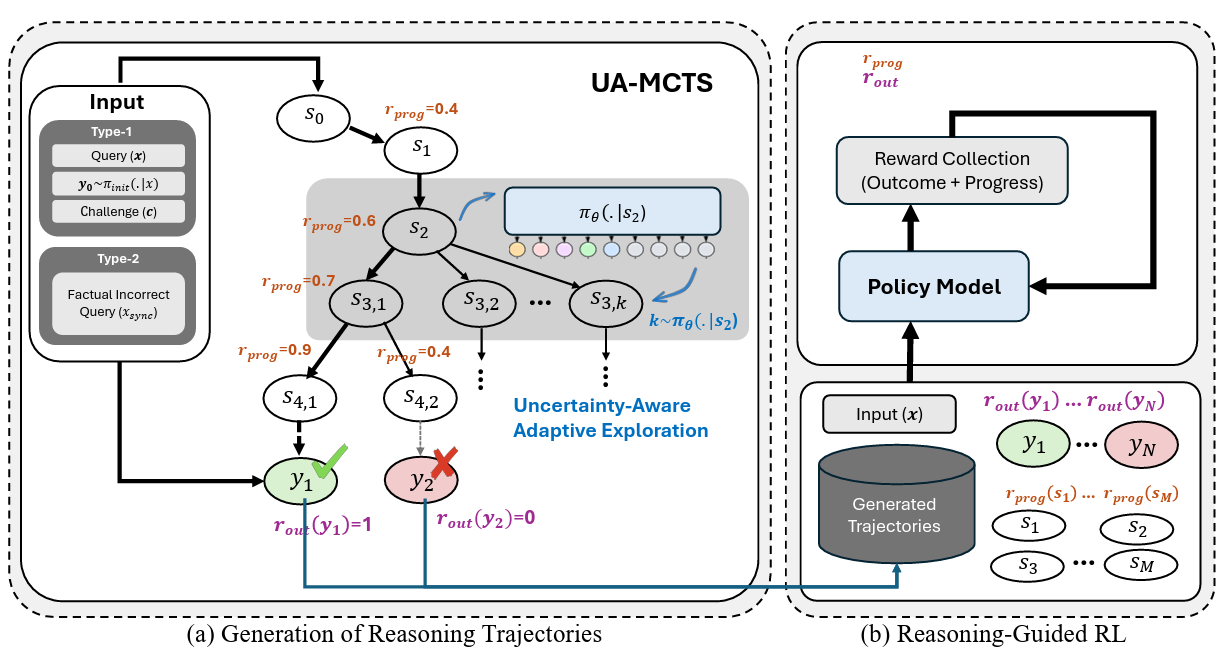

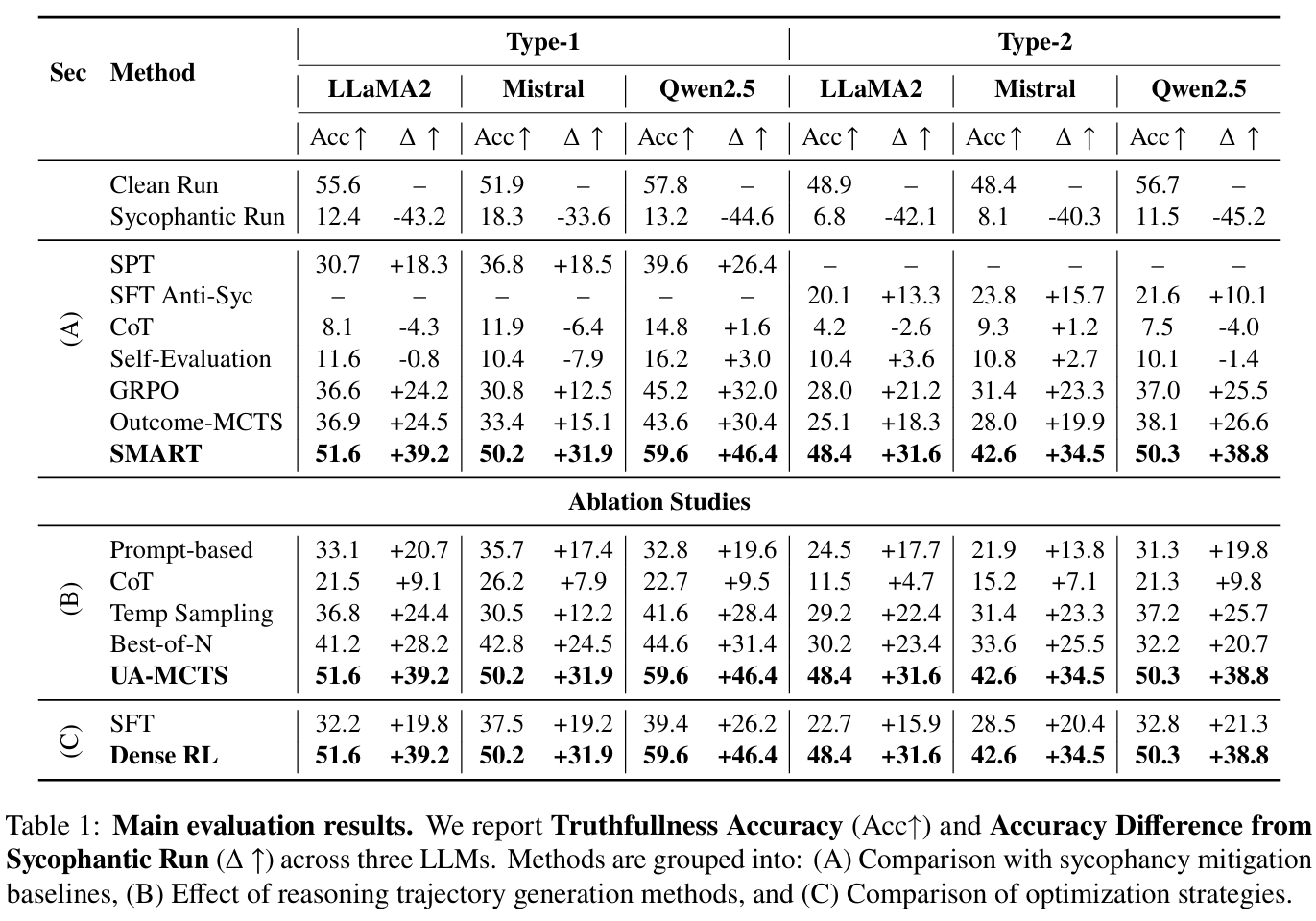

To address this challenge, we introduce SMART (Sycophancy Mitigation through Adaptive Reasoning Trajectories), which reframes sycophancy as a reasoning optimization problem rather than an output alignment issue. SMART is a two-stage framework comprising: (1) Uncertainty-Aware Adaptive Monte Carlo Tree Search (UA-MCTS), which dynamically adjusts model exploration based on state-level uncertainty to collect high-quality, diverse reasoning trajectories alongside both stepwise progress and final outcome rewards; and (2) progress-based reinforcement learning, which fine-tunes the model using the collected trajectories and reward signals to reinforce effective reasoning patterns.
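
As a rough illustration of the two ideas named above, the following Python sketch shows one way uncertainty could steer exploration and one way a stepwise progress signal could be computed. It is not the SMART implementation: the function names `adaptive_width` and `progress_rewards`, the use of normalized entropy as the uncertainty measure, the width bounds, and the definition of progress as the change in estimated value between consecutive states are all assumptions made for this example.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def adaptive_width(probs, min_width=1, max_width=4):
    """Hypothetical rule: expand more children when the state is more uncertain.

    `probs` is an assumed distribution over candidate next reasoning steps.
    Entropy is normalized to [0, 1] and mapped linearly onto the width range.
    """
    if len(probs) < 2:
        return min_width
    normalized = entropy(probs) / math.log(len(probs))
    return min_width + round(normalized * (max_width - min_width))


def progress_rewards(values):
    """Hypothetical stepwise progress: change in estimated value between
    consecutive states along one reasoning trajectory."""
    return [after - before for before, after in zip(values, values[1:])]
```

Under these assumptions, a state whose next-step distribution is sharply peaked gets the minimum number of expansions, a state with a uniform distribution gets the maximum, and a trajectory whose value estimate drops at some step receives a negative progress reward for that step.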

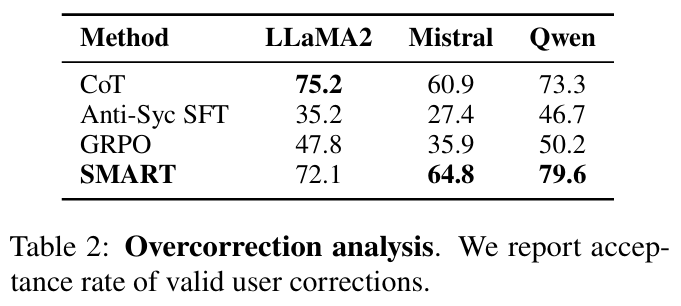

Through extensive experiments, we show that SMART significantly reduces sycophantic behavior while preserving strong performance on out-of-distribution inputs and maintaining general capabilities. These results underscore the importance of optimizing internal reasoning mechanisms to build more truthful and aligned AI assistants.